Google can spin the "Duplex" calling agent in a much more positive way

Submitted by brad on Tue, 2018-05-22 12:45

Most of the world was wowed by the Google Duplex demo, in which the system cold-called a hairdresser and made an appointment with her, with the hairdresser unaware she was talking to an AI. The system included human speech mannerisms and the ability to respond to the random phrases the hairdresser threw back.

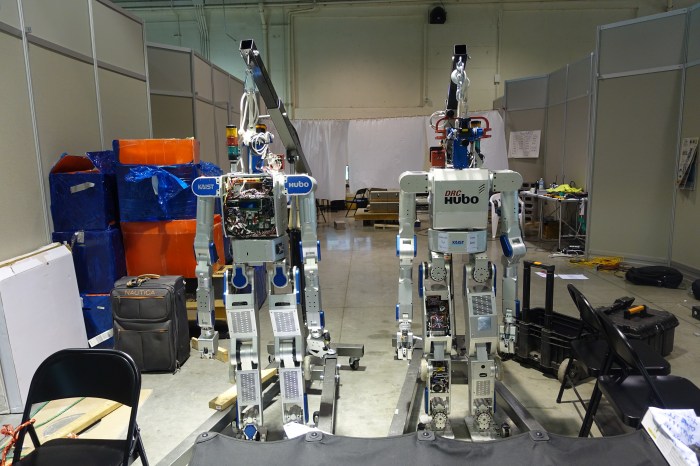

One can understand the appeal of presenting the simulation in a mostly real environment. But the advantages of the VR experience are many. In particular, with the top-quality, retinal-resolution light-field VR we hope to see in the future, the big advantage is that you don't need to make the physical things look real. You will have synthetic bodies, but they only have to feel right, and only where you touch them. They don't have to look right. For example, they can have cables coming out of them connecting them to external computing and power. You don't see the cables, nor the other manipulators that are keeping the cables out of your way (even briefly unplugging them) as you and they move.

In August, I attended the World Science Fiction Convention (WorldCon) in London. I did it while in Coeur D'Alene, Idaho by means of a remote Telepresence Robot(*). The WorldCon is half conference, half party, and I was fully involved -- telepresent there for around 10 hours a day for 3 days, attending sessions, asking questions, going to parties. Back in Idaho I was speaking at a local robotics conference, but I also attended a meeting back at the office using an identical device while I was there.

If Satoshi could sell, it is hard to work out exactly when the time to sell would be. Bitcoin has several possible long-term fates: