Comparing the Uber and Tesla fatalities with a table

The Uber car and Tesla's Autopilot, both in the news for fatalities, are really two very different things. This table outlines the differences. Also, see below for some new details on why the Tesla crashed, and more.

| Uber ATG Test | Tesla Autopilot |
|---|---|
| A prototype full robocar capable (eventually) of unmanned operation on city streets | A driver assist system for highways and expressways |
| Designed for taxi service | Designed for privately owned and driven cars |
| A full suite of high-end robocar sensors, including LIDAR | Production automotive sensors: primarily cameras and radar |
| 1 pedestrian fatality; other accidents unknown | Fatalities in Florida, China and California; other serious crashes without injury |
| Approximately 3 million miles of testing | Late 2016: 300M miles, plus 1.3B miles of data gathering |
| A prototype in testing that needs a human safety driver monitoring it | A production product overseen by the customer |
| Designed to handle everything it might encounter on the road | Designed to handle only certain situations; users are expressly warned it doesn't handle major things like cross traffic, stop signs and traffic lights |
| Still at an early stage, needing intervention every 13 miles on city streets | In production and rarely needing intervention on highways, but on city streets it would need intervention very frequently, perhaps at every intersection |
| Needs state approval for testing, with rules requiring safety drivers; Arizona approval withdrawn, California permit expired | Not regulated, like the adaptive cruise control/lanekeeping it is based on |
| Only Uber employees can get behind the wheel | Anybody can be behind the wheel |
| Vehicle failed in a manner outside its design constraints -- it should have readily detected and stopped for the pedestrian | Vehicles had incidents in ways expected under their design constraints |
| Vehicle was trusted too much by the safety driver, who took eyes off the road for 5 seconds | Vehicles were trusted too much by drivers, who took eyes off the road for 6 seconds or longer |
| Safety drivers get 3 weeks of training and are fired if caught using a phone | No training or penalties for customers, though the manual and screen describe proper operating procedures |
| Safety driver recorded with a camera, but no software warnings of inattention | Tesla drivers get visual, then audible alerts if they take their hands off the wheel for too long |
| Criticism that the solo safety driver job is too hard and that inattention will happen | Criticism that drivers overtrust the system and regularly don't look at the road |
| Killed a pedestrian, though the car had the right of way | Killed customers who were ignoring monitoring requirements -- no bystanders killed so far |
| NTSB investigating | NTSB investigating |

Each company faces a different challenge to fix its problems. For Uber, they need to improve the quality of their self-drive software so that such a basic failure as we saw here is extremely unlikely. Perhaps even more importantly, they need to revamp their safety driver system so that safety driver alertness is monitored and assured, including going back to two safety drivers in all situations. Further, they should consider some "safety driver assist" technology, such as the use of the system in the Volvo (or some other aftermarket system) to provide alerts to the safety drivers if it looks like something is going wrong. That's not trivial -- if the system beeps too much it gets ignored, but it can be done.

Tesla faces a more interesting challenge. Its claim is that, in spite of the accidents, Autopilot is still a net win. Because people who drive properly with Autopilot have half the accidents of people who drive without it, the total number of accidents is still lower, even counting the accidents, including these fatalities, that happen to those who disregard the warnings about how to use it properly.

That people disregard those warnings is obvious and hard to stop. Tesla argues, however, that turning off Autopilot because of this misuse would make Tesla driving, and the world, less safe. Tesla does have options to make people drive diligently with Autopilot, but it must not make Autopilot so much less pleasant that people stop using it, even properly. If enough people did that, driving would actually become less safe.
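The net-win argument is a weighted average over all miles driven, and a small model makes that concrete. Every number below is hypothetical; only the "half the accidents with proper use" factor comes from Tesla's claim as described above. The model also illustrates the trade-off in the previous paragraph: stricter monitoring that eliminates misuse but drives away proper users can leave the fleet-wide rate worse.

```python
# Hypothetical model of the "net win" argument. All inputs are illustrative
# assumptions; only the 0.5 factor reflects Tesla's claim as described above.

PROPER_USE_FACTOR = 0.5  # claim: proper Autopilot use halves the accident rate


def fleet_rate(baseline, share_proper, share_misuse, misuse_multiplier):
    """Accidents per mile averaged over all miles driven.

    baseline          -- accident rate per mile without Autopilot
    share_proper      -- fraction of miles on Autopilot with an attentive driver
    share_misuse      -- fraction of miles on Autopilot with an inattentive driver
    misuse_multiplier -- hypothetical risk of misused miles relative to baseline
    """
    share_manual = 1.0 - share_proper - share_misuse
    if share_manual < 0:
        raise ValueError("shares of miles must not exceed 1 in total")
    return baseline * (
        share_manual
        + share_proper * PROPER_USE_FACTOR
        + share_misuse * misuse_multiplier
    )


def break_even_misuse_multiplier(share_proper, share_misuse):
    """Misuse risk at which Autopilot stops being a net win.

    Solves share_proper * 0.5 + share_misuse * m = share_proper + share_misuse.
    """
    return 1.0 + share_proper * (1.0 - PROPER_USE_FACTOR) / share_misuse


if __name__ == "__main__":
    # Lenient monitoring: more use overall, some of it careless.
    lenient = fleet_rate(1.0, share_proper=0.40, share_misuse=0.10, misuse_multiplier=2.0)
    # Strict monitoring: no misuse, but many drivers give up on Autopilot.
    strict = fleet_rate(1.0, share_proper=0.15, share_misuse=0.0, misuse_multiplier=2.0)
    print(f"lenient: {lenient:.3f}  strict: {strict:.3f}  (baseline 1.000)")
    print(f"break-even misuse multiplier: {break_even_misuse_multiplier(0.40, 0.10):.1f}")
```

With these made-up inputs the lenient policy (0.900 of baseline) beats the strict one (0.925), and misused miles would have to be three times as risky as ordinary driving before the lenient mix stopped being a net win. Different inputs can reverse the conclusion, which is why the real numbers matter.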

Why the Tesla crashed

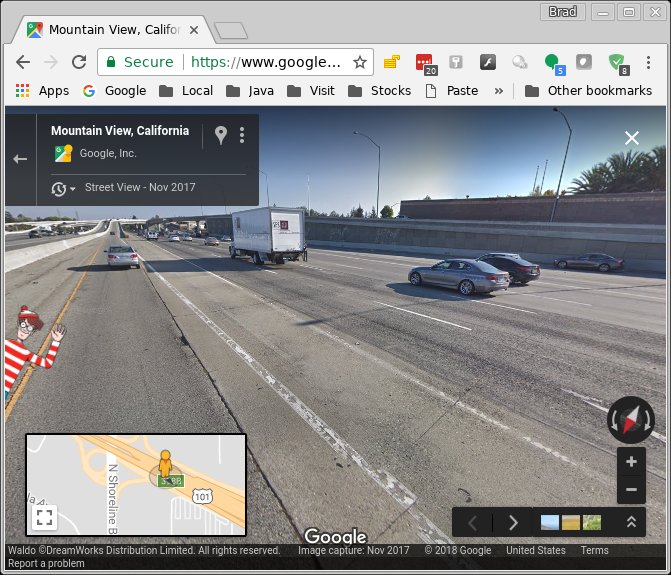

A theory, now given credence by some sample videos, suggests the Tesla was confused by the white lines that divide the road at an off-ramp, forming the expanding triangle known as the "gore." As the triangle widens, a simple system might think the two lines are the borders of a lane. Poor lane marking along the gore might even make the vehicle think this new "lane" is a continuation of the lane it is in, so the car tries to follow it -- right into the barrier.
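To see why the expanding triangle can pass for a lane, here is a toy lane check that accepts any pair of lines spaced like a lane. The gore's spread rate and the 10 to 14 foot "plausible lane width" window are assumptions for illustration, and real lane-keeping systems use many more cues than width; the point is only that a width test alone cannot reject a gore over some stretch of road.

```python
# Toy model: a gore is two painted lines diverging from a tip. A naive lane
# detector that only checks line spacing will accept part of the gore as a lane.
# The spread rate and width window below are illustrative assumptions.

MIN_LANE_WIDTH_FT = 10.0  # assumed lower bound for a plausible lane
MAX_LANE_WIDTH_FT = 14.0  # assumed upper bound for a plausible lane


def gore_width_ft(distance_from_tip_ft, spread_per_ft):
    """Width between the gore's two lines, assuming they diverge linearly."""
    return distance_from_tip_ft * spread_per_ft


def looks_like_lane(width_ft):
    """Naive check: any pair of lines spaced like a lane is treated as one."""
    return MIN_LANE_WIDTH_FT <= width_ft <= MAX_LANE_WIDTH_FT


def lane_like_stretch_ft(spread_per_ft):
    """Distances from the tip (start, end) where the gore passes the naive check."""
    return MIN_LANE_WIDTH_FT / spread_per_ft, MAX_LANE_WIDTH_FT / spread_per_ft


if __name__ == "__main__":
    spread = 0.04  # hypothetical: the lines separate by 1 ft every 25 ft
    start, end = lane_like_stretch_ft(spread)
    print(f"gore looks like a lane from {start:.0f} ft to {end:.0f} ft past the tip")
    for d in range(0, 501, 100):
        w = gore_width_ft(d, spread)
        print(d, round(w, 1), looks_like_lane(w))
```

With a spread of 1 foot per 25 feet, the gore passes the width test for about 100 feet (250 to 350 feet past the tip), roughly a second at highway speed.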

This video, made by Tesla owners in the Indiana area, shows a Tesla doing this where the right line of the gore is very washed out compared to the left. At 85/101 (the site of the recent Tesla crash) the lines are mostly stronger, but there is a 30-40 foot gap in the right line which perhaps could trick a car into entering and following the gore. The gore at 85/101 also lacks the chevron "do not drive here" stripes often found at these gores. Autopilot is not good at detecting stationary objects like the crumple barrier, but the barrier's warning stripes are something that should be in its classification database.
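A 30-40 foot gap sounds small, but what matters to a camera-based lane tracker is how long the line is missing. A quick conversion, assuming highway speeds of 65-70 mph (the crash speed is not given here), shows the right line would be absent for well under half a second.

```python
# Convert a gap in a painted line into the time a car spends crossing it.
# The speeds are assumptions; the 30-40 ft gap length is from the text.

FT_PER_S_PER_MPH = 5280 / 3600  # 1 mph is about 1.467 ft/s


def gap_duration_s(gap_ft, speed_mph):
    """Seconds during which a line with a gap of gap_ft is missing at speed_mph."""
    if speed_mph <= 0:
        raise ValueError("speed must be positive")
    return gap_ft / (speed_mph * FT_PER_S_PER_MPH)


if __name__ == "__main__":
    for speed in (65, 70):
        for gap in (30, 40):
            print(f"{gap} ft gap at {speed} mph: {gap_duration_s(gap, speed):.2f} s")
```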

Once again, Autopilot is just a smart cruise control. It is going to make mistakes like this, which is why Tesla tells you that you have to keep watching. Perhaps crashes like this will make people do that.

The NTSB is angry that Tesla released any information. I was not aware the NTSB frowned on this. It may explain Uber's silence during the NTSB investigation of its crash.

Comments

Anonymous

Tue, 2018-04-03 07:21

One intervention every 13 miles statistic.

"Still in an early state, needing intervention every 13 miles on city streets."

I've seen the 13 miles figure quoted in another article, but in that case the author referred to it as miles per disengagement, and in the comments accompanying that article someone pointed out that the figure was pretty useless without knowing what types of disengagements Uber is counting.

Are they counting routine disengagements where the vehicle arrives at its destination, parks safely, and the software automatically disengages? Situations where the vehicle is functioning normally, but the driver chooses to take manual control (e.g., driving around the block because the car can't see an appropriate place to park at its destination, or because it is too timid when attempting to change lanes behind a double-parked vehicle)? To me, those types of situations are very different from the safety driver needing to take manual control to prevent an accident, or because they feel the car is driving inappropriately for the circumstances.

Do you have a source for "needing intervention every 13 miles on city streets"? Does it offer any information on why the drivers intervened? (Also, just how bad is the traffic on those city streets? Does that one intervention translate to one every 30 minutes, or is it more like one every two hours?)

brad

Tue, 2018-04-03 11:00

NYT story

Uber has not published the number; the NYT reported it based on internal sources within the company. I have not seen a public description of Uber's "rules of disengagement," just that the number was way low and they were behind schedule at getting it up.

If you count all disengagements, then yes, you count the stops and pee breaks, but not destination arrivals if the car is able to park itself (which I doubt they even have yet).

More sophisticated systems count only unplanned disengagements -- interventions and software faults. A really sophisticated system also counts "safety disengagements" -- places where a contact or right-of-way (ROW) violation would have happened without the intervention.
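Because the headline number depends on which events are counted, the same test log can produce very different "miles per disengagement" figures. The sketch below applies the three counting rules just described to a made-up log. The event categories follow the comment; the log, the mileage and the 20 mph average city speed (used to turn miles into minutes, as the earlier question asked) are hypothetical.

```python
from enum import Enum


class Disengagement(Enum):
    PLANNED = "planned"      # e.g. end of a run or a pee break
    UNPLANNED = "unplanned"  # driver intervention or software fault, no conflict
    SAFETY = "safety"        # contact or ROW violation likely without intervention


# Counting rules, from broadest to strictest. Safety disengagements are a
# subset of unplanned ones, so both rules that count unplanned events include them.
RULES = {
    "all": {Disengagement.PLANNED, Disengagement.UNPLANNED, Disengagement.SAFETY},
    "unplanned": {Disengagement.UNPLANNED, Disengagement.SAFETY},
    "safety": {Disengagement.SAFETY},
}


def miles_per_disengagement(miles, events, counted):
    """Miles driven divided by the number of events that the rule counts."""
    n = sum(1 for event in events if event in counted)
    return float("inf") if n == 0 else miles / n


def minutes_between(miles_per_event, avg_speed_mph):
    """Average minutes between counted events at a given average speed."""
    return miles_per_event / avg_speed_mph * 60


if __name__ == "__main__":
    # Hypothetical 130-mile log: 4 planned, 5 unplanned, 1 safety disengagement.
    log = ([Disengagement.PLANNED] * 4 + [Disengagement.UNPLANNED] * 5
           + [Disengagement.SAFETY])
    for name, counted in RULES.items():
        mpd = miles_per_disengagement(130, log, counted)
        print(f"{name:>9}: {mpd:6.1f} mi, {minutes_between(mpd, 20):6.0f} min at 20 mph")
```

With this invented log, counting everything gives 13 miles per disengagement (about 39 minutes at 20 mph), while counting only safety disengagements gives 130 miles, so the counting rules matter as much as the figure itself.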

wallace

Tue, 2018-04-03 15:25

UBER accident

My previous company used to work with the Army on LIDAR and radar, and the video of the UBER crash makes no sense to me.

It seemed the LIDAR should have easily detected the lady walking the bike across the road. She had to walk the bike across another lane before being in the lane the UBER was driving in. The LIDAR should have had plenty of field of view, resolution and range to detect this. It is almost as if the LIDAR was not functional. I don't understand how there could be a software issue. There was no fog or rain, so the reflections should have been fine, and there was nothing between her and the UBER, so no excuse there either...
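This intuition can be checked with a back-of-the-envelope stopping budget: compare the distance at which a sensor first sees an obstacle with the distance the car needs to react and brake. Every input here is an assumption, not a figure from the Uber investigation: a speed of about 40 mph, a 0.5 s system reaction time, braking at 7 m/s², and a 50 m detection range.

```python
# Back-of-the-envelope stopping budget. All inputs are assumptions.

MPH_TO_M_PER_S = 0.44704  # exact conversion factor


def stopping_distance_m(speed_mph, reaction_s, decel_m_s2):
    """Distance covered during the reaction time plus braking to a stop."""
    v = speed_mph * MPH_TO_M_PER_S
    return v * reaction_s + v * v / (2 * decel_m_s2)


def can_stop(detection_range_m, speed_mph, reaction_s=0.5, decel_m_s2=7.0):
    """True if an obstacle first seen at detection_range_m can be avoided by braking."""
    return detection_range_m >= stopping_distance_m(speed_mph, reaction_s, decel_m_s2)


if __name__ == "__main__":
    d = stopping_distance_m(40, reaction_s=0.5, decel_m_s2=7.0)
    print(f"stopping distance at 40 mph: {d:.1f} m")
    print("stops in time with 50 m detection:", can_stop(50, 40))
```

Under these assumptions the car needs roughly 32 m to stop, so a detection range comfortably beyond that leaves time to brake. The sketch ignores detection and classification latency and the pedestrian's own motion.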

Steve

Wed, 2018-04-04 01:29

NTSB attitude disappointing

I don’t know the background and how the legal system works, but I would prefer good faith continuous disclosure.

The public should have a right to know what causes these accidents as soon as practicable. This is primarily in the interests of public safety. If there are potential problems that could be repeated, they need to be communicated early, even if the cause(s) are found to be somewhat different after a full investigation.

Erik Vinkhuyzen

Fri, 2018-04-06 13:35

Tesla Autopilot safety

Do you know how, exactly, Tesla arrived at the conclusion that its Autopilot is safer than human driving?

It seems to me that the obvious way to calculate it is to divide 'number of accidents while on Autopilot' by 'number of miles driven on Autopilot' and then compare that with a simple statistic of 'number of accidents per highway mile driven' (the highway part is important, and I hope they use it).

If that is indeed the way they calculate their safety record, I think it paints a very rosy picture. In most cases, when the Autopilot starts to fail (i.e., when a dangerous situation arises), the human driver takes over, and whatever happens next is not counted against the Autopilot's safety statistics. That would skew the statistics immensely. I have driven with Autopilot and it worked fine most of the time. When it did not, and things got hairy, I took over. If you compare the miles after such takeovers with the miles on supervised Autopilot, you are comparing two very different things, as I took over in a situation that the Autopilot caused but that I had to deal with. Do you know how this statistic is calculated? Does my argument hold any water?
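The argument above is about where crashes get booked. A small accounting model with entirely hypothetical rates shows the mechanism: when Autopilot mishandles a situation and the driver takes over, any crash that follows is logged as manual driving, so the logged Autopilot rate understates the risk that Autopilot situations create and the manual rate is inflated. For simplicity the model assumes a mishandled situation with no takeover always ends in a crash.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    ap_miles: float
    manual_miles: float
    manual_crash_rate: float     # crashes per mile in ordinary driving
    ap_trouble_rate: float       # situations per mile that Autopilot mishandles
    takeover_share: float        # fraction of those where the driver takes over
    crash_after_takeover: float  # fraction of takeovers that still end in a crash


def logged_rates(s):
    """Crash rates per mile as logged vs. as attributable to Autopilot situations.

    Simplification: a mishandled situation without a takeover always crashes.
    """
    trouble = s.ap_miles * s.ap_trouble_rate
    crashes_on_ap = trouble * (1 - s.takeover_share)
    crashes_after_takeover = trouble * s.takeover_share * s.crash_after_takeover
    manual_crashes = s.manual_miles * s.manual_crash_rate
    return {
        # What a naive with/without comparison sees:
        "ap_logged": crashes_on_ap / s.ap_miles,
        "manual_logged": (manual_crashes + crashes_after_takeover) / s.manual_miles,
        # Booking takeover crashes to the situations Autopilot created:
        "ap_attributable": (crashes_on_ap + crashes_after_takeover) / s.ap_miles,
        "manual_true": s.manual_crash_rate,
    }


if __name__ == "__main__":
    scenario = Scenario(
        ap_miles=1e6, manual_miles=1e6, manual_crash_rate=4e-6,
        ap_trouble_rate=1e-4, takeover_share=0.99, crash_after_takeover=0.05,
    )
    for name, rate in logged_rates(scenario).items():
        print(f"{name:>16}: {rate * 1e6:.2f} crashes per million miles")
```

With these made-up inputs the naive comparison shows about 1 crash per million Autopilot miles against about 9 for manual miles, even though Autopilot situations account for about 6 per million, more than the 4 of ordinary driving. Whether real data behaves like this depends on takeover rates that the sketch simply assumes.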

brad

Fri, 2018-04-06 16:15

That's it

They compare rates with and without autopilot, and rates with autopilot (including the people who smash into walls like this) are better. So it is a net win. Society is rarely so utilitarian, however.

dan

Fri, 2018-04-06 17:30

so what exactly are the numbers?

With autopilot on, we apparently have 3 fatalities listed above in the article (Florida, China, California), although in the China fatality I don't think Tesla has confirmed that autopilot was on. With autopilot off, how many fatalities are there? What percentage of miles are driven with autopilot on?

For non-fatal Autopilot accidents, we have the Southern California firetruck crash, a Sept 21, 2017 Hayward, California gore-point crash, a Dallas, TX center-divider scrape, and I'm not sure what else. Here's a link to the Hayward news story: http://abc7news.com/automotive/i-team-exclusive-tesla-crash-in-september-showed-similarities-to-fatal-mountain-view-accident/3302389/

How do these compare with non-fatal accidents with autopilot off?

brad

Fri, 2018-04-06 18:05

All tesla accidents

I have not seen a complete list of Tesla accidents. We do know the rough statistics for all cars: a fatality every 80M miles (every 180M on highways), an injury every 12M, a police-reported accident every 500K, an insurance accident every 250K, and a "ding" every 100K -- the last figure only came to light recently.

dan

Fri, 2018-04-06 19:33

some figures

There are some figures in this article: https://www.greencarreports.com/news/1107109_teslas-own-numbers-show-autopilot-has-higher-crash-rate-than-human-drivers

brad

Fri, 2018-04-06 20:10

From Nov 2016

That data is a bit old. Tesla Autopilot miles are up a great deal since then, as are regular miles. Fatalities are too few in number to give good statistics; you would want data on all accidents to reach a real conclusion.

However, as long as the number is in the range of the number without Autopilot (or even the number for other cars), there is an argument that it should continue, for this is the only way products get better: by starting out worse. The question we should ask is, "What risk rate can we tolerate from the first generation of a product?" and I think "similar to human driving" would be a likely answer. We might even tolerate slightly worse, if the people at risk willingly accepted the risk. So far the Tesla fatalities have all been Tesla occupants, but that's not always going to be the case.
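Why small fatality counts make weak statistics can be shown with a Poisson model. Taking the rough highway baseline quoted earlier in this thread (one fatality per 180M miles) and the late-2016 figure of 300M miles from the table, a system exactly as safe as human highway driving would be expected to produce about 1.7 fatalities. Treating those two figures as comparable, and fatalities as independent events, are simplifying assumptions.

```python
import math


def expected_events(miles, miles_per_event):
    """Expected count if events occur at the baseline rate."""
    return miles / miles_per_event


def poisson_pmf(k, mean):
    """Probability of exactly k events when the expected count is `mean`."""
    return math.exp(-mean) * mean**k / math.factorial(k)


if __name__ == "__main__":
    # Illustrative inputs: 300M miles at a baseline of 1 fatality per 180M miles.
    mean = expected_events(300e6, 180e6)
    print(f"expected fatalities: {mean:.2f}")
    for k in range(5):
        print(f"P({k} fatalities) = {poisson_pmf(k, mean):.2f}")
    print(f"P(0 to 3) = {sum(poisson_pmf(k, mean) for k in range(4)):.2f}")
```

Under the "as safe as humans" assumption, every outcome from zero to three fatalities has a probability above 10 percent, so a handful of crashes cannot by itself separate a system that is twice as safe from one that is somewhat worse. That is why data on all accidents would be far more informative.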

Erik Vinkhuyzen

Sun, 2018-04-08 12:46

Autopilot safety

Do you not think that if Tesla wants to claim that Autopilot is safer than human driving, then they really should compare 'unsupervised' Autopilot with regular driving? Comparing supervised Autopilot is just not right, because if people take over when the Autopilot messes up, you are only counting the 'good moments' -- small wonder they come out so favorably. Of course Tesla doesn't have the statistics for unsupervised Autopilot, but recent accidents clearly indicate that unsupervised Autopilot is a really bad idea. Saying Autopilot makes traffic safer is nonsense if the technology needs to be supervised constantly.

brad

Mon, 2018-04-09 12:09

No, that's not right

Unsupervised Autopilot is clearly inferior, markedly so. While I am sure they measure that, there is little doubt of its inferiority. What Tesla claims is that supervised Autopilot, including mistakes by both the Autopilot and the supervisor, is superior to ordinary driving. And if so, there is an argument that it is not a really bad idea. The whole point of Autopilot is that it needs supervision. It is definitely dangerous to use it unsupervised, and Tesla says this all the time.

Erik Vinkhuyzen

Mon, 2018-04-09 12:51

Autopilot safety

Thanks for the clarification. I wonder if it is really the technology's capability that is making things safer. I rather think the reason supervised Autopilot enhances safety is that it basically instills in the driver the attitude of staying in their lane and patiently following the car in front. That is the right attitude to have in traffic, and if Autopilot instills it because that is when it works best, I am all for it. The question is how to make sure people don't rely on Autopilot and start doing other things. Disabling Autosteer would probably make the car safer still. They should try it as an experiment.

brad

Mon, 2018-04-09 15:27

That may play a role

Better driving "hygiene" may play a role, but I think that Tesla would say that Autopilot plus driver is more likely to catch lane departures and sudden slowing of the vehicle in front of you than the driver alone. In other words, it provides the same benefit as lanekeeping and forward collision warning/avoidance, but increased even further. FCW is documented to reduce accident frequency and severity. Autopilot is a super-FCW and so might claim to do that even better.

Anthony

Sun, 2018-04-08 18:13

Apples and aardvarks

"…perhaps the only valid comparative benchmark for the Autopilot Tesla is the non-Autopilot Tesla."

I'm not sure even that is a valid comparison, as Autopilot may not be enabled during a representative sample of Tesla usage. But yeah, comparing Autopilot Tesla miles to average statistics for all cars is not a valid comparison.

brad

Mon, 2018-04-09 12:11

Tesla has data

On where you drive, so while I don't know if this is what they have done, they have the power to compare autopilot on driving with autopilot off driving on the same stretches of roads. In general, highway driving has lower accident rates per mile (half in fact) and autopilot is used primarily on highway, so if they tried to compare highway with city, that would be a very obvious error.
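Comparing Autopilot-on and Autopilot-off driving on the same stretches of road is the standard fix for this kind of confounder. The sketch below uses invented mileage and crash counts in which Autopilot has no effect at all on any road type, with highway crash rates half of city rates as stated above. Because Autopilot miles are mostly highway miles, the pooled comparison still makes Autopilot look safer, while the per-road-type comparison does not.

```python
# Invented data: (miles, crashes) by road type and Autopilot state.
# Within each road type the crash rate is the same with and without Autopilot.
DATA = {
    ("highway", True): (900_000, 9),
    ("highway", False): (300_000, 3),
    ("city", True): (100_000, 2),
    ("city", False): (700_000, 14),
}


def pooled_rate(autopilot_on):
    """Crashes per mile, ignoring road type (the misleading comparison)."""
    rows = [mc for (_road, on), mc in DATA.items() if on == autopilot_on]
    miles = sum(m for m, _ in rows)
    crashes = sum(c for _, c in rows)
    return crashes / miles


def rate_ratio_by_road(road):
    """Autopilot-on crash rate divided by Autopilot-off rate on the same road type."""
    on_miles, on_crashes = DATA[(road, True)]
    off_miles, off_crashes = DATA[(road, False)]
    return (on_crashes / on_miles) / (off_crashes / off_miles)


if __name__ == "__main__":
    print(f"pooled per million miles: on {pooled_rate(True) * 1e6:.0f}, "
          f"off {pooled_rate(False) * 1e6:.0f}")
    for road in ("highway", "city"):
        print(f"{road}: on/off rate ratio = {rate_ratio_by_road(road):.2f}")
```

Here the pooled figures suggest Autopilot cuts crashes from 17 to 11 per million miles, yet the ratio within each road type is exactly 1, so the entire apparent benefit comes from where Autopilot is used.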
