Can't we make overbooking more efficient and less painful with our mobile devices?

Submitted by brad on Mon, 2017-07-24 12:33

I've written before about overbooking and how it's good for passengers as well as for the airlines. If we have a service (airline seats, rental cars, hotel rooms) where the seller knows it's extremely likely that, of 100 booked slots, 20 will go to no-shows, we can have two results:


Level zero is just the existing rider on horseback. Level one is the traditional horse-drawn carriage or coach, as has been used for many years.

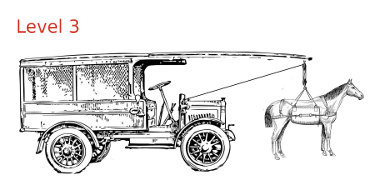

In a level 3 carriage, the horses will sometimes provide the power, but the carriage is allowed to switch over entirely to the "motor," with the horses stepping onto a platform or otherwise being raised so they are not working. If the carriage approaches an area it can't handle, or the motor has problems, the horses should be ready, with about 10 to 20 seconds' notice, to step back onto the ground and start pulling. In some systems the horse(s) can be in a hoist which can raise them off the trail or lower them back onto it.


There are several things notable about Waymo's pilot:

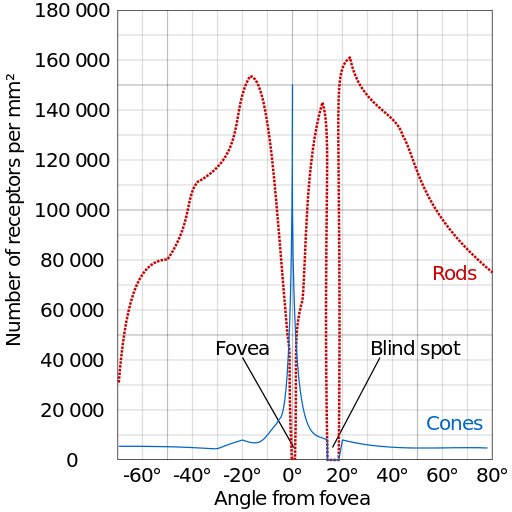

My thought is to combine foveal video with animated avatars for brief moments after