Yikes - even Barack Obama wants to solve robocar "Trolley Problems" now

I had hoped I was done ranting about our obsession with what robocars will do in no-win "who do I hit?" situations, but this week even Barack Obama, in his interview with Wired, opined on the issue, prompted by my friend Joi Ito from the MIT Media Lab. (The Media Lab recently ran a misleading exercise asking people to pretend they were a self-driving car deciding who to run over.)

I've written about the trouble with these problems and even proposed a solution, but it seems the topic still needs revisiting. Let's examine why this problem is definitely not important enough to merit the attention of the President or his regulators, and how focusing on it might even make the world more dangerous.

We are completely fascinated by this problem

Almost never do I give a robocar talk without somebody asking about this. Two nights ago, I attended another speaker's talk, and this was the second question he got. He looked at his watch and declared he had won a bet with himself about how quickly somebody would ask. It has become the #1 question in the minds of the public, and even of Presidents.

It is not hard to understand why. Life or death issues are morbidly attractive to us, and the issue of machines making life or death decisions is doubly fascinating. It's been the subject of academic debates and fiction for decades, and now it appears to be a real question. For those who love these sorts of issues, and even those who don't, the pull is inescapable.

At the same time, even the biggest fans of these questions, stepping back a bit, would agree they are of only modest importance. They might not agree with the very low priority I assign, but I don't think anybody feels they are anywhere close to the #1 question out there. Since we are nonetheless so fascinated by them, we must recognize that we are very poor at judging their importance. Everyone who has not already done so needs to look at how much importance they assign and apply an automatic discount. This is hard to do. We are often terrible at statistics and at reasoning about the probability of risk. We worry much more about a terrorist attack on our flight than about the drive to the airport, but that's entirely backwards: the drive is far more dangerous. This is one of those situations, and while people are free to judge risks incorrectly, academics and regulators must not.

Academics call this the law of triviality. Terrorism is a real-world example: the risk is very small, but we make immense efforts to prevent it and far smaller efforts to fight much larger risks.

These situations are quite rare, and we need data about how rare they are

In order to judge the importance of these risks, it would be great to have real data. All traffic fatalities are documented in fairly good detail, as are many accidents. A worthwhile academic project would be to determine just how frequent these incidents are. I suspect they are extremely infrequent, especially ones involving a fatality. Right now fatalities happen about once every 2 million hours of driving, and the majority of those are single-car fatalities (with fatigue and alcohol among the leading causes). I have yet to read a report of a fatality or serious injury in which a driver had no escape but could choose what to hit, with different choices leading to injuries for different people. I am not saying such cases don't exist, but first examinations suggest they are quite rare: probably hundreds of billions of miles, if not more, between them.
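
To make the frequency argument concrete, here is a back-of-the-envelope sketch. Only the "one fatality per 2 million hours" figure comes from the text above; the 30 mph average speed and the 200-billion-mile spacing between genuine trolley-style events are assumptions chosen purely for illustration, not measurements.

```python
# Only HOURS_PER_FATALITY comes from the text; the other two constants are
# illustrative ASSUMPTIONS, not measured data.
HOURS_PER_FATALITY = 2_000_000           # ~1 fatality per 2 million driving hours
ASSUMED_AVG_SPEED_MPH = 30               # assumption
ASSUMED_MILES_PER_TROLLEY_EVENT = 2e11   # assumption: "hundreds of billions of miles"


def miles_per_fatality(hours_per_fatality: float, avg_speed_mph: float) -> float:
    """Convert an hours-between-fatalities figure into miles between fatalities."""
    return hours_per_fatality * avg_speed_mph


def fatalities_per_trolley_event(miles_per_event: float, miles_per_death: float) -> float:
    """Ordinary fatalities that occur for every genuine trolley-style event."""
    return miles_per_event / miles_per_death


if __name__ == "__main__":
    mpf = miles_per_fatality(HOURS_PER_FATALITY, ASSUMED_AVG_SPEED_MPH)
    ratio = fatalities_per_trolley_event(ASSUMED_MILES_PER_TROLLEY_EVENT, mpf)
    print(f"miles between fatalities: {mpf:,.0f}")
    print(f"fatalities per trolley-style event: {ratio:,.0f}")
```

Under these assumptions, one fatality every 60 million miles and one trolley-style event every 200 billion miles works out to roughly 3,300 ordinary fatalities per trolley event. Changing the assumed speed or event spacing shifts the ratio proportionally.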

Those who want to claim they are important have the duty to show that they are more common than these intuitions suggest. Frankly, I think if there were accidents where the driver made a deliberate decision to run down one person to save another, or to hurt themselves to save another, they would be fairly big human-interest news stories; our fascination with this question guarantees it. Just how many lives would really be saved if cars made the "right" decision about who to hit in the tiny handful of accidents where they must hit somebody?

In addition, there are two broad classes of situations. In one, the accident is the fault of another party or cause, and in the other, it is the fault of the driver making the "who to hit" decision. In the former case, the law puts no blame on you for who you hit if forced into the situation by another driver. In the latter case, we have the unusual situation that a car is somehow out of control or making a major mistake and yet still has the ability to steer to hit the "right" target.

These situations will be much rarer for robocars

Unlike humans, robocars will drive conservatively and be designed to avoid failures. For example, in the MIT study, the scenario was often a car whose brakes had failed. That won't happen to robocars -- ever. I really mean never. Robocar designs now commonly feature two redundant braking systems, because they can't rely on a human pumping the hydraulics manually or pulling an emergency brake. In addition, every time they apply the brakes, they will be testing them, and at the first sign of any problem they will go in for repair. The same is true of their two redundant steering systems. Complete failure should be ridiculously unlikely.
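
The value of redundancy plus constant self-testing can be sketched with a little probability. This assumes, loudly, that the two brake systems fail independently, and the per-trip failure rate used is a made-up placeholder, not a real figure for any vehicle.

```python
# ASSUMPTIONS: the two brake systems fail independently, and the failure
# probability below is a hypothetical placeholder, not real vehicle data.
P_FAIL_PER_TRIP = 1e-4


def p_both_fail(p_primary: float, p_backup: float) -> float:
    """Probability that two independent systems are both failed at once."""
    return p_primary * p_backup


def p_failed_by_now(p_per_trip: float, trips_since_last_check: int) -> float:
    """Probability a system has failed at some point since it was last verified.

    If every brake application also tests the system, trips_since_last_check
    stays at 1 and a single failure is caught (and repaired) right away.
    """
    return 1 - (1 - p_per_trip) ** trips_since_last_check


if __name__ == "__main__":
    tested_every_trip = p_both_fail(P_FAIL_PER_TRIP, p_failed_by_now(P_FAIL_PER_TRIP, 1))
    rarely_tested = p_both_fail(P_FAIL_PER_TRIP, p_failed_by_now(P_FAIL_PER_TRIP, 1000))
    print(f"backup tested every trip:        {tested_every_trip:.1e}")
    print(f"backup tested every 1,000 trips: {rarely_tested:.1e}")
```

With these made-up numbers, testing on every brake application keeps the double-failure chance near one in a hundred million per trip, nearly a thousand times lower than verifying the backup only once every thousand trips.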

The cars will not suddenly come upon a crosswalk full of people with no time to stop -- they know where the crosswalks are, and they won't drive too fast to stop for one. They will also be constantly measuring traction and road conditions to make sure they don't drive too fast for the road. They won't go around blind corners at high speed. They will have maps showing all known bottlenecks and construction zones. Ideally, new construction zones will only be created after a worker has logged the zone on a mobile phone and the update has been pushed out to cars heading that way; if for some reason the workers don't do that, the first car to encounter the anomaly will make sure all other cars know.
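
The "never too fast to stop" rule can be illustrated with a standard constant-deceleration model: reaction distance plus braking distance, d = v·t + v²/(2μg). The sketch below inverts it to find the highest speed at which the car can still stop within the distance it can see. The 0.2 s machine reaction time and the friction coefficients (about 0.7 for dry pavement, 0.4 for wet) are rough illustrative assumptions; a real planner would use measured traction and far more detailed vehicle dynamics.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def stopping_distance(speed_mps: float, reaction_s: float, mu: float) -> float:
    """Distance covered during the reaction delay plus braking at a constant mu*g."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2 * mu * G)


def max_safe_speed(sight_distance_m: float, reaction_s: float, mu: float) -> float:
    """Largest speed whose stopping distance fits inside the visible distance.

    Solves v*t + v^2 / (2*a) = d for v, where a = mu*g (positive root).
    """
    a = mu * G
    return a * (-reaction_s + math.sqrt(reaction_s ** 2 + 2 * sight_distance_m / a))


if __name__ == "__main__":
    # Hypothetical: a crosswalk 40 m ahead, 0.2 s machine reaction time.
    for label, mu in (("dry", 0.7), ("wet", 0.4)):
        v = max_safe_speed(40.0, 0.2, mu)
        print(f"{label}: at most {v:.1f} m/s ({v * 3.6:.0f} km/h)")
```

The wet-road limit comes out noticeably lower, which is the sense in which constantly measuring traction translates directly into a speed cap.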

This does not mean the cars will be perfect, but they won't be hitting people because they were reckless or had predictable mechanical failures. Their failures will be stranger, and those stranger failures will also make it less likely that the vehicle still has the ability to choose who to hit.

To be fair, robocars also introduce one other big difference. Humans can argue that they don't have time to think through what they might do in a split-second accident decision. That's why when they do hit things, we call them accidents. They clearly didn't intend the result. Robocars do have the time to think about it, and their programmers, if demanded to by the law, have the time to think about it. Trolley problems demand the car be programmed to hit something deliberately. The impact will not be an accident, even if the cause was. This puts a much higher standard on the actions of the robocar. One could even argue it's an unfair standard, which will delay deployment if we need to wait for it.

In spite of what people describe in scenarios, these cars won't leave their right of way

It is often imagined that an ethical robocar might veer into the oncoming lane or onto the sidewalk to hit a lesser target instead of a more vulnerable one in its path. That's not impossible, but it's pretty unlikely. For one, it's super-duper illegal. I don't see a company, unless forced to, programming a car to ever deliberately leave its right of way in order to hit somebody. Even if doing so saved 3 school buses full of kids, deliberately killing somebody standing on the sidewalk sounds like a company-ruining move.

For another, developers just won't put much energy into making their cars drive well on the sidewalk or in oncoming traffic. Nor should they! This means the cars will not be well tested or designed for doing it. Humans are general thinkers; we can handle driving on the grass even though we have had little practice. Robots don't quite work that way, even ones designed with machine learning.

This limits most of the situations to ones where you have a choice of targets within your right-of-way. And changing lanes is always riskier than staying in your lane, especially if there is something else in the lane you want to change into. Swerving makes sense if the other lane is clear, but swerving into an occupied lane is once again going to be uncharted territory for the car.

By and large the law already has an answer

The vehicle code is quite detailed about who has right-of-way (ROW). In almost every accident, somebody didn't have it, and that party is at fault under the law. The first instinct of most programmers will be to have their car follow the law and stick to its ROW. Deliberately leaving your ROW is a very risky move, as outlined above. You might get criticized for running over jaywalkers when you could have veered onto the sidewalk, but the former won't be punished by the law and the latter can be. If people don't like the law, they should change the law.

The lesson of the Trolley problem is "you probably should not try to solve trolley problems."

Ethicists correctly point out that, while Trolley problems may be academic exercises, they are worth investigating for what they teach. That's true in the classroom. But look at what they teach! From a pure "save the most people" utilitarian standpoint, the answer is easy -- switch the trolley onto the track where it kills one in order to save 5. But many people don't pick that answer, and most refuse it in the "big man" version, where you can push a big man standing with you on a bridge onto the tracks to stop the trolley and save the 5. The problem teaches us that we feel much better about leaving things as they are than about overtly deciding to kill a bystander. What the academic exercise teaches us is that in the real world, we should not foist this problem on the developers.

If it's rare and a no-win situation, do you have to solve it?

Trolley problems are philosophy class exercises to help academics discuss ethical and moral problems. They aren't guides to real life. In the classic trolley problem we forget that none of it happens unless a truly evil person has tied people to a railway track. Many would argue that the actors in a trolley problem are absolved of moral responsibility because the true blame lies with the setting and its architect, not with them. In philosophy class, we can still debate which outcome is more or less moral, but they are all evil. These are "no win" situations; indeed, part of the point of these problems is to describe situations with no clear right answer. All answers are wrong, and people disagree about which is most wrong.

If a situation is rare, and it takes effort to figure out which answer is less wrong, and things will still be wrong after you do this even if you do it well, does it make sense to demand an answer at all? To the individuals involved, yes, but not to society. The hard truth is that with about 1.2 million auto fatalities a year worldwide -- a number we all want to see go down greatly -- it doesn't matter much to society whether, in a scenario that happens once every few years, 2 people or 3 are killed, however much we argue over which choice was more moral. That's because answering the question, and implementing the answer, have a cost.

Every life matters, but we regularly make decisions like this. We find things that are bad and rare, and we decide that below a certain risk threshold, we will not try to solve them unless the cost is truly zero. And here the cost is very far from zero. Because these are no-win situations and each choice is wrong, each choice comes with risk. You may work hard to pick the "right" choice and end up having others declare it wrong -- all to make a very tiny improvement in safety.

At a minimum, each solution will involve thought and programming, as well as emotional strain for those involved. It will involve legal review and, under the new regulations, certification processes and documentation. Everything that goes into the decision must be recorded and justified. All of this is untrodden legal ground, making it even harder. In addition, no real scenario will match a hypothetical situation exactly, so the software must apply to a range of situations and still do the intended thing (let alone the right thing) as the situation varies. This is not minor.

Nobody wants to solve it

In spite of the fascination these problems hold, coming up with "solutions" to these no-win situations is the last thing developers want to do. In articles about these problems, we almost always see the question, "Who should decide who the car will hit?" The answer is that nobody wants to decide. Any answer is almost surely wrong in the view of some. Nobody is going to get much satisfaction or any kudos for doing a good job, whatever that is. Combined with the rarity of these events compared to the many other problems on the table, this puts solving these ethical issues very, very, very low on the priority list for most teams. Because developers and vendors don't want to solve these questions and take the blame for the solutions, it makes more sense to ask policymakers to settle whatever truly needs settling. As Christoph von Hugo of Mercedes put it, "99% of our engineering work is to prevent these situations from happening at all."

The cost of solving may be much higher than people estimate

People grossly underestimate how hard some of these problems will be to solve. Many of the situations I have seen proposed actually demand that cars develop entirely new capabilities that they don't need except to solve these problems. In these cases, we are talking about serious cost, and delays to deployment if it is judged necessary to solve these problems. Since robocars are planned as a life-saving technology, each day of delay has serious consequences. Real people will be hurt because of these delays aimed at making a better decision in rare hypothetical situations.

Let's consider some of the things I have seen:

- Many situations involve counting the occupants of other cars, or counting pedestrians. Robocars don't otherwise have to do this, nor can they easily do it. Today it doesn't matter whether there are 2 or 3 pedestrians -- the only rule is not to hit any of them. With low-resolution LIDAR or radar, such counts are very difficult. Counts inside vehicles are even harder.

- One scenario considers evaluating motorcyclists based on whether they are wearing helmets. I think this one is ridiculous, but if people take it seriously, it becomes a serious burden. Helmet use is almost impossible to discern from a LIDAR image and can be challenging even with computer vision.

- Some scenarios involve driving off cliffs or onto sidewalks or otherwise off the road. Most cars make heavy use of maps to drive, but they have no reason to make maps of off-road areas at the level of detail that goes into the roads.

- More extreme scenarios compare things like children vs. adults, or school buses vs. regular buses. Today's robocars have no reason to tell these apart. And how do you tell an adult with dwarfism from a child? Full handling of these moral valuations requires human-level perception in some cases.

- Some suggestions have asked cars to compare levels of injury. Cars might be asked to judge the difference between a fatal impact and one that just breaks a leg.

These are just a few examples. A large fraction of the hypothetical situations I have seen demand some capability of the cars that they don't have or don't need to have just to drive safely.

The problem, of course, is that some say one must not put cars on the road until the ethical dilemmas have been addressed. Not everybody says this, but it's a very common sentiment, and now the new regulations demand at least some evaluation of it. However much the regulations claim to be voluntary, that claim is false, and not just because some states are already talking about making them more mandatory.

Once a duty of care has been suggested, especially by the government, you ignore it at your peril. Once you know the government -- all the way to the President -- wants you to solve something, then you must be afraid you will be asked "why didn't you solve that one?" You have to come up with an answer to that, even with voluntary compliance.

The math on this is worth understanding. Robocars will be deployed slowly into society but that doesn't matter for this calculation. If robocars are rare, they can prevent only a smaller number of accidents, but they will also encounter a correspondingly smaller number of trolley problems. What matters is how many trolley situations there are per fatality, and how many people you can save with better handling of those problems. If you get one trolley problem for every 1,000 or 10,000 fatalities, and robocars are having half the fatalities, the math very clearly says you should not accept any delay to work on these problems.
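
The claim that fleet size cancels out can be checked with a few lines of arithmetic. In this sketch, F is the number of people human drivers would kill over the miles the robocars actually drive; both the cost of a year's delay and the yearly benefit of a trolley-problem "decider" scale with F, so the answer does not depend on it. The function name and the assumption that a decider saves one whole life per trolley event (a generous figure) are illustrative, not taken from any study.

```python
def years_to_repay_one_year_of_delay(
    human_fatalities_on_robocar_miles: float,  # F: deaths if humans drove those miles
    robocar_fatality_ratio: float,             # 0.5 means robocars have half the fatalities
    trolley_events_per_fatality: float,        # e.g. 1/1000 or 1/10000
    lives_saved_per_event: float,              # extra lives a "decider" saves per event
) -> float:
    """Years of better trolley handling needed to offset one year of delay.

    Hypothetical model: trolley events are counted against the human-driver
    fatality count F for the same miles, so F cancels out of the ratio.
    """
    F = human_fatalities_on_robocar_miles
    lives_lost_per_year_of_delay = F * (1 - robocar_fatality_ratio)
    lives_saved_per_year_by_decider = F * trolley_events_per_fatality * lives_saved_per_event
    return lives_lost_per_year_of_delay / lives_saved_per_year_by_decider


if __name__ == "__main__":
    for ratio in (1 / 1_000, 1 / 10_000):
        small = years_to_repay_one_year_of_delay(100, 0.5, ratio, 1.0)
        large = years_to_repay_one_year_of_delay(100_000, 0.5, ratio, 1.0)
        print(f"1 event per {round(1 / ratio):,} fatalities: "
              f"{small:,.0f} years (small fleet), {large:,.0f} years (large fleet)")
```

Under these assumptions, one year of delay takes about 500 years of perfect trolley handling to pay back at one event per 1,000 fatalities, and about 5,000 years at one per 10,000, whatever the fleet size.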

The court of public opinion

The real courts may or may not punish vendors for picking the wrong solution (or the default solution of staying in your lane) in no-win situations. Chances are the court of public opinion will be feared more. There is reason to fear the public would not react well if a vehicle could have produced an obviously better outcome, particularly if the bad outcome involves children or highly vulnerable road users rather than adults and at-fault or protected road users.

Because of this I think that many companies will still try to solve some of these problems even if the law puts no duty on them. Those companies can evaluate the risk on their own and decide how best to mitigate it. That should be their decision.

For a long time, many people felt any robocar fatality would cause an uproar in the public eye. To everybody's surprise, the first Tesla Autopilot deaths were followed by Tesla stock rising for 2 months, even with 3 different agencies conducting investigations. While in reality Tesla drivers bear much more responsibility than riders in a full robocar would, the public isn't very clear on that point, so the lack of reaction is astonishing. I suspect companies will discount this risk somewhat after this event.

This is a version 2 feature, not a version 1 feature

As noted, while humans make split-second "gut" decisions and we call the results accidents, robocars are much more intentional. If we demand they solve these problems, we ask something of them and their programmers that we don't ask of human drivers. We want robocars to drive more safely than humans, but we also must accept that the first robocars to be deployed will only be a little better. The goal is to start saving lives and to get better and better at it as time goes by. We must consider the ethics of making the problem even harder on day one. Robocars will be superhuman in many ways, but primarily at doing the things humans do, only better. In the future, we should demand these cars meet an even higher standard than we put on people. But not today: The dawn of this technology is the wrong time to also demand entirely new capabilities for rare situations.

Performing to the best moral standards in rare situations does not belong on the feature list for the first cars. Solving trolley situations well is in the "how do we make this perfect?" problem set, not the "how do we make this great?" set. It is important to remember how the perfect can be the enemy of the good, and to distinguish between the two. Yes, this means accepting a low chance that somebody could be hurt or die, but people are already being killed, in large numbers, by the human drivers we aim to replace.

So let's solve trolley problems, but do it after we get the cars out on the road both saving lives and teaching us how to improve them further.

What about the fascination?

The over-fascination with this problem is a real thing even if the problem isn't. Surveys have produced one interesting result: when you ask people what a car should do for the good of society, they say it should sacrifice its passenger to save multiple pedestrians, especially children. On the other hand, when you ask people whether they would buy a car that did that, far fewer say yes. As long as the problem is rare, there is no actual "good of society" priority; the real good of society comes from getting this technology deployed and driving safely as quickly as possible. Mercedes recently announced a much simpler strategy that does what people actually want, and got criticized for it. Their strategy is reasonable -- they want to save the party they can be most sure of saving, namely the passengers. They note that they have very little reliable information about what will happen in other cars or who is in them, so they should focus not on a guess about what would save the most people, but on what will surely save the people they know about.

What should we do?

I make the following concrete recommendations:

- We should do research to determine how frequent these problems are and how many have "obvious" answers, and thus learn just how many fatalities and injuries might be prevented by better handling of these situations.

- We should remove all expectation on first generation vehicles that they put any effort into solving the rare ones, which may well be all of them.

- It should be made clear there is no duty of care to go to extraordinary lengths (including building new perception capabilities) to deal with sufficiently rare problems.

- Due to the public over-fascination, vendors may decide to publish their approaches to satisfy the public. Simple approaches should be encouraged, and in the early years of this technology, almost no answer should be considered "wrong."

- For non-rare problems, governments should set up a system where developers/vendors can ask for rulings on the right behaviour from the policymakers, and limit the duty of care to following those rulings.

- As the technology matures, and new perception abilities come online, more discussion of these questions can be warranted. This belongs in car 2.0, not car 1.0.

- More focus at all levels should go into the real everyday ethical issues of robocars, such as roads where getting around requires regularly violating the law (speeding, aggression etc.) in the way all human users already do.

- People writing about these problems should emphasize how rare they are and, when presenting artificial scenarios, point out how artificial they are. Because of the public's fears and poor risk analysis, it is inappropriate to feed those fears rather than be realistic.

Comments

Anonymous

Fri, 2016-10-14 13:00

The law doesn't specify

The law doesn't specify right of way, it specifies failure to yield.

brad

Fri, 2016-10-14 13:02

It does both

What you are failing to yield is right of way. The law outlines who had the right of way and who failed to yield it. But it does more. You have no right-of-way on the sidewalk (outside a driveway) and I do not think you are simply failing to yield to a pedestrian if you drive there.

hedgehog1

Fri, 2016-10-14 13:35

Postponing Robocars is a real world 'Trolley Car' problem

Robocars will save a lot of lives: Fewer people will die on the roads if we ship them sooner - more people will die if we ship them later.

Postponing the shipping of Robocars is a real world 'Trolley Car' problem. Five people will die per 10 million miles driven by humans, where only one person will die per 10 million miles driven by Robocars.

If someone wants an ethical debate, perhaps this will put it in perspective. Every time cars are delayed because of these kinds of academic thought experiments/ethics exercises, four more people per 10 million miles driven will die.
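
The commenter's arithmetic is simple to spell out. The two rates below are the commenter's illustrative figures as stated, not measured data, and the function name is hypothetical.

```python
# The commenter's illustrative rates, not measured statistics.
HUMAN_DEATHS_PER_10M_MILES = 5
ROBOCAR_DEATHS_PER_10M_MILES = 1


def extra_deaths_from_delay(miles_driven_by_humans_instead: float) -> float:
    """Deaths attributable to miles driven by humans that robocars could have driven."""
    excess_per_10m = HUMAN_DEATHS_PER_10M_MILES - ROBOCAR_DEATHS_PER_10M_MILES
    return miles_driven_by_humans_instead / 10_000_000 * excess_per_10m


if __name__ == "__main__":
    print(extra_deaths_from_delay(10_000_000))  # 4.0, the "four more" per 10M miles
```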

brad

Fri, 2016-10-14 13:59

Meta-Trolley

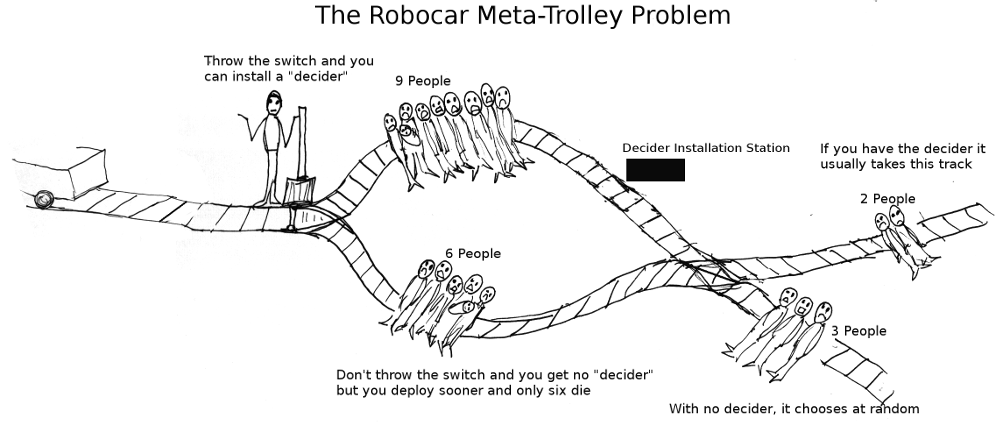

Yes, I call it the meta-trolley problem in my first essay on the subject, but ethicists are not buying it. Part of the Trolley lesson is about harm through inaction vs. harm from your deliberate decisions, and indeed the question of delay or extra capabilities and the lives saved or not saved is a factor here.

Though note that in the Trolley Problem, many conclude that those who are killed by others, even in greater numbers, are not as much your responsibility. Certainly nobody is pushing these questions with malice.

You might phrase it this way:

A trolley is coming down the tracks. On the main track, 6 people are tied, but you can throw a switch. Throwing it puts the trolley onto a track where it can stop and install a "decider" for no-win situations. This takes time, and so more people will be killed while taking this route.

The two tracks merge and there is a decision point. Now the trolley with the decider can examine both tracks and pick the best one, normally the one with only 2 deaths on it. Without the decider it makes a random choice and kills 2.5 on average.

Do you throw the switch?
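
The expected-value comparison in this meta-trolley can be sketched as below. It models only the junction (tracks with 2 and 3 people, so a random pick kills 2.5 on average) against a hypothetical number of extra deaths caused by stopping to install the decider; the comment does not give that number, so the value used here is an assumption.

```python
from statistics import mean


def expected_deaths_random(track_deaths: list[int]) -> float:
    """No decider: each track is equally likely to be chosen."""
    return mean(track_deaths)


def expected_deaths_with_decider(track_deaths: list[int]) -> float:
    """With a decider: always take the track with the fewest people on it."""
    return min(track_deaths)


def net_lives_saved_by_switch(track_deaths: list[int], deaths_during_delay: float) -> float:
    """Positive means throwing the switch saves lives overall; negative means it costs them."""
    gain_at_junction = expected_deaths_random(track_deaths) - expected_deaths_with_decider(track_deaths)
    return gain_at_junction - deaths_during_delay


if __name__ == "__main__":
    tracks = [2, 3]
    # Hypothetical delay cost: one extra death while the decider is installed.
    print(net_lives_saved_by_switch(tracks, deaths_during_delay=1.0))
```

In this framing the decider gains only half a life at the junction, so throwing the switch pays off only if the detour to install it costs fewer than half a life on average.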

Jim Lieb

Fri, 2016-10-14 21:05

Be very careful what you pray for. You might just get it...

I refer you to:

https://www.theguardian.com/technology/2016/oct/11/crash-how-computers-are-setting-us-up-disaster

I am now retired from software development (40 years in mostly research and networked systems) but before that I was an Air Traffic Controller and pilot. I also have some experience with railroads. I have seen a whole lot of crashes and worked a whole lot of emergencies. The premise that a robocar is safer than a human driver is false. It is false because it ignores the real-world operational issues involved with big heavy vehicles that operate around, and carry, valuable "objects" like people.

I saw recently that the Tesla's sensors can't find a grey-colored semi in front of it on foggy days. Even my tired old eyes can do that. I may not be able to distinguish the edge between the grey back and the sky, but I will recognize the darker bits, the plates, tires, door latches in various configurations and, because of years of visual experience and knowing the context of being on a foggy road, say to myself, "slow down and be prepared to stop because that is a big ass truck." Our brains have 1,000,000 years of development behind them, shaped by all of the failures: our distant cousins who did not recognize the big cat hiding in the tree over their heads were eaten by predators they could not recognize quickly enough. Remember, the robocar sensors and code are orders of magnitude less sophisticated than the visual and hearing senses and predator avoidance reactions of even the simplest of higher vertebrates, let alone a dumber-than-a-box-of-rocks human. What happens if the "observer" doesn't call in the construction detour?

Consider your jaywalking case. Many years ago my son "made a wrong turn" into someone's back yard. He did it because he was an inexperienced driver and was not paying attention. Your case, correct? He was fined and my insurance got more expensive. But what if, instead of grabbing a piece of paper flying around the seat, he saw a little kid come out from behind a car to chase a ball into the street? Even your car would not stop in time, assuming it was smart enough to sense and recognize kids playing ball in the front yard. Going through the fence and the citation would be the same in either case. But if he ran over the child, he'd be in jail for vehicular manslaughter and I would have lost my house. Is anyone on your dev team willing to accept that level of civil liability? The trolley problem is real. Even a driver dumber than a box of rocks would head into the weeds to avoid hitting a person. Are you willing to commit in writing that the robocar is ready to make that decision correctly, and/or are the devs and their company willing to open their own wallets to cover the liability? I didn't think so. Those are the stakes, and in the real, operational world, it is _all_ about corner cases.

Look at the case at the end of the article after reading the whole article. AF447 was not only the most automated aircraft in service, but Air France has some of the best pilots in the world, in an environment far safer and more predictable than any street a robocar would be operating on. And all three of them, well-trained (or so we thought) pilots, were set up by the automated systems and flew a perfectly functioning modern aircraft right into the ocean. That article makes a very important point that anyone who has actually worked in transport operations would understand but that most academics and enthusiastic product devs have no clue about.

Note the solution at the end of the article. It answers very well the problems the robocar aspires to solve. Monderman solved the problem not by adding more automation but by removing most of the very things that the automation would want to use. Note his "party trick". If the robocar took his place, the drivers, pedestrians, cyclists, dogs, and squirrels would just note that this dumb robocar is confused and lost and drive, walk, bike, or bark appropriately. Even a driver dumber than a box of rocks would drive around it, thus preserving the robocar's safety record for it. They could easily cope if the chaos caused it to segfault and stop right in the middle of the street.

Sorry to rain on the parade. I avoided AI and projects like this because I've had real world experience of hubris killing people who would not be dead if the principals were a bit more humble and realistic about what their real limitations were. I trust the automation in my EV car to decide when to turn on the gas motor to assist the batteries or how to take advantage of regen braking when I apply the brakes but it is properly my job to decide when to apply the accelerator or apply the brake.

brad

Sat, 2016-10-15 12:21

Air France Crash

People talk about this one and crashes like it a lot. It's why Google decided to take the steering wheel out for their experiments.

But just to make one thing clear: "Safer than a human driver" isn't so much a premise as a requirement. That is, people are probably not going to release their cars into production until they have demonstrated that to themselves, their lawyers, and the public. Until then, they will, like Tesla, require constant human supervision. Some wonder whether that supervision is possible, of course, but I'm talking about what happens once supervision is no longer needed.

Safer than humans does not mean perfect of course, and one of the valid reasons for the ethics debate is to consider the public reaction, which will not be rational.

Brad2

Mon, 2016-10-24 09:28

You do realize that AF447

You do realize that AF447 crashed because of the pilots' interference with each other and the autopilot system, right? This case is one of the reasons many SDC developers don't want a steering wheel.

Anonymous

Sun, 2016-10-16 13:43

Trolley Problem

One of the problems with 'robo-car' development is that the developers are having to apply objective judgement in a subjective world; the 'trolley problem' is just an extreme form of subjective judgement, but that same subjective judgement applies to many, many situations that occur each day on the road.

brad

Sun, 2016-10-16 15:06

Subjective yes...

We make many subjective judgments, but the difference is that humans, when they make decisions on the road, do so not with deliberate planning but with gut reactions. Machines will have only one style of thinking. Unlike a human, they will make a very deliberate decision about what to do, and that decision will be the result of their programming (or machine learning), made in advance. That sets a new high bar: the vehicles must do something we don't ask of humans, in a situation where we can blame the programmers for something we would not blame humans for.

Phillip Helbig

Mon, 2016-10-17 08:58

The important point for many people

"Right now fatalities happen about every 2 million hours of driving, and the majority of those are single car fatalities (with fatigue and alcohol among leading causes.)"

An important point for many people, including myself, is that I can (and do) choose not to drive if I am tired, and I never drink alcohol anyway. So my risk is lower than average. Whether it is lower than the risk of a Tesla on Autopilot is another, and by far more interesting, question.

Suppose there were a lottery where the state chose one person per year to be drawn and quartered in a public spectacle. Whether or not you oppose such an idea, I assume you agree that "any other cause of death is much more likely, so why make a big deal over this" is not an appropriate response.

Another aspect is the effort involved. Even if travelling by train is safer than travelling by car, it is still justifiable to complain about unnecessary deaths, perhaps due to cost-cutting on the part of the train company. The argument "cars are even less safe" doesn't cut it. There is an obvious conflict of interest here. Most car deaths are preventable, either by the person who died, in which case it is his own fault, or by the other driver, in which case the dead person couldn't have prevented it. But he understood the risks and realized that, short of banning all cars including his own, there is no practical way to prevent such deaths (though in some cases harsher punishment, better driver's ed, etc. might help). The average train passenger has no way to check how diligent the train company is, and even if there is a problem, it might be difficult to hold a single person responsible.

brad

Mon, 2016-10-17 23:54

Why make a deal

The draw-and-quarter argument is of course a silly one (though it appears frequently in trolley problems). There, the state is murdering someone.

And the trolley fans are asking the car developers to also write code to murder somebody, which is also a terrible thing to ask. We are much more bothered by explicitly killing somebody than killing them "by default." So much so that we would call plowing into a jaywalker "an accident" but we would call "deciding to avoid 2 jaywalkers by running onto the sidewalk and killing one person" murder.

The government should not put this burden on the developers of these cars. The government should write laws that say where the cars have the legal right of way. The developers should program their cars to stay in it unless they can safely depart it in the interests of safety. (i.e. it's OK to swerve onto an empty sidewalk to avoid somebody, as that is a mostly safe thing to do, but not OK to swerve onto a lightly occupied sidewalk, because that is not a safe thing to do, even if some might argue it is more moral.)

What I mean when I write that we should not focus on these very rare events is that we already have answers for them. The answers are simply not ideal in the view of some people, and people don't even agree on what the best answers are. Because there already is an answer for what to do (stay in your ROW if you have no safe way out) and the situation will be very rare, it is wrong to demand a new solution.
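
The default rule above can be sketched as a tiny decision function. This is a minimal, hypothetical illustration, not how any real robocar planner works: the `Maneuver` type, the `people_at_risk` prediction and `choose_maneuver` are all invented for the example, and a real system would reason about probabilities rather than exact counts.

```python
from dataclasses import dataclass


@dataclass
class Maneuver:
    """One candidate action, as a hypothetical planner might describe it."""
    name: str
    stays_in_row: bool   # True if the path stays within the car's legal right of way
    people_at_risk: int  # hypothetical predicted number of people the path would strike


def choose_maneuver(options: list[Maneuver]) -> Maneuver:
    """Default rule: stay in the right of way unless leaving it is safe."""
    in_row = [m for m in options if m.stays_in_row]
    if not in_row:
        raise ValueError("need at least one in-ROW option, e.g. braking in lane")
    # 1. An in-ROW maneuver that strikes nobody is always preferred.
    for m in in_row:
        if m.people_at_risk == 0:
            return m
    # 2. Leaving the ROW is allowed only onto a path predicted to be empty.
    for m in options:
        if not m.stays_in_row and m.people_at_risk == 0:
            return m
    # 3. Otherwise stay in the ROW and take the least harmful in-ROW option.
    #    Off-ROW paths that would strike someone are never weighed against it,
    #    even if they would strike fewer people.
    return min(in_row, key=lambda m: m.people_at_risk)


brake = Maneuver("brake in lane", stays_in_row=True, people_at_risk=2)
empty_walk = Maneuver("swerve onto empty sidewalk", stays_in_row=False, people_at_risk=0)
busy_walk = Maneuver("swerve onto occupied sidewalk", stays_in_row=False, people_at_risk=1)

print(choose_maneuver([brake, empty_walk]).name)  # swerve onto empty sidewalk
print(choose_maneuver([brake, busy_walk]).name)   # brake in lane
```

The point of the sketch is step 3: the function never compares an in-ROW outcome against an off-ROW path that hits someone, so it does not need an answer to the trolley problem at all.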

Tom Spuhler

Mon, 2016-10-17 15:35

What if we could just STOP the Trolley or Robocar!

I share Brad's dismay with the obsessional pantings, which have yet to die down, surrounding robocars and the so-called “Trolley Problem” (TP). These Kobayashi Maru (Star Trek) wannabes are trying to focus on the “decision”, seemingly hoping to expose some great unknown dark underbelly of humankind, which maybe it has. However, like Kirk, I'm thinking of solutions, not maiming and murdering gloriously.

The obvious solution is to STOP the car. Brad has already discussed measures being taken to ensure brake availability. I've seen some other discussions of requiring brake plates, especially in riderless cars. Robocars could have the access and ability for more extreme measures such as side skids and the like. Maybe rapid tire deflation. Put it in park! If we expend resources on the topic, I propose they go toward possibly extreme solutions with greater applicability than caterwauling about nuns versus kitty cats.

What we need to decide and codify, if it doesn't already exist, is what level of discomfort or risk robocar passengers may be subjected to in order to protect other people, animals, infrastructure, things, the car and themselves (protecting the car and its occupants is already a car maker concern). Each of these, and subclasses thereof, will likely have different values. Some additional features such as airbags might be required to allow the robocar to better protect outsiders without injuring occupants.

For example: to protect any human life, robocar occupants may be subjected to forces no greater than those that might cause minor injuries, and any level of physical damage to the robocar and minor infrastructure is acceptable. To avoid a small bird, occupants may be subjected to forces no greater than those that would spill coffee, and only minor damage to the vehicle is acceptable. Note that this says the robocar could trash its transmission, but not significantly injure its occupants, in order to avoid injuring or killing that crosswalk full of nuns.
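
A codified rule of this kind is essentially a lookup table of limits. The sketch below is a hypothetical illustration only: the level names, the `LIMITS` table and `maneuver_permitted` are invented, it covers just the two examples above, and it leaves out infrastructure damage for brevity. Ordered qualitative levels are used on purpose, since turning "spills coffee" into actual force values would be a job for regulators.

```python
from enum import IntEnum


class OccupantForce(IntEnum):
    """Ordered, qualitative levels of force on occupants (hypothetical scale)."""
    NONE = 0
    SPILLS_COFFEE = 1
    MINOR_INJURY = 2
    SERIOUS_INJURY = 3


class VehicleDamage(IntEnum):
    """Ordered, qualitative levels of damage to the robocar (hypothetical scale)."""
    NONE = 0
    MINOR = 1
    MAJOR = 2  # e.g. a trashed transmission; the top of the scale means "any damage"


# Hypothetical codified limits: for each thing being protected, the most
# occupant force and vehicle damage an evasive maneuver may cause.
LIMITS = {
    "human": (OccupantForce.MINOR_INJURY, VehicleDamage.MAJOR),
    "small_bird": (OccupantForce.SPILLS_COFFEE, VehicleDamage.MINOR),
}


def maneuver_permitted(protecting: str, force: OccupantForce, damage: VehicleDamage) -> bool:
    """True if a maneuver stays within the limits set for what it protects."""
    max_force, max_damage = LIMITS[protecting]
    return force <= max_force and damage <= max_damage


# Trashing the transmission, without seriously hurting anyone, to spare the nuns: allowed.
print(maneuver_permitted("human", OccupantForce.MINOR_INJURY, VehicleDamage.MAJOR))      # True
# Injuring occupants to avoid a small bird: not allowed.
print(maneuver_permitted("small_bird", OccupantForce.MINOR_INJURY, VehicleDamage.NONE))  # False
```

Keeping the table outside the driving code also fits the argument that follows: a regulator could fix the values so that no occupant could select a more "aggressive" setting.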

Some sort of codification might be required for these settings, as it is probably in the best interest of society and the industry NOT to allow robocar occupants a setting that, for example, only permits evasive maneuvers NOT severe enough to spill coffee when trying to avoid running someone over. Note that one of the only conclusions of all these TP studies seems to be that a significant percentage of potential robocar users want their own robocar to be aggressive but want everyone else's to be deferential.

–

Other comments:

Brad, I like your stuff. If you have or create a much shorter version of your post that we could point people to, that would be handy, especially now that regulators are requesting input.

GMcK

Mon, 2016-10-17 17:53

Use the Law, that's why it's there

Back when I was doing research in artificial intelligence, I had colleagues who were trying to launch a program in AI and the Law, without a lot of success. FW Maitland's characterization of the law as a "seamless web" proved to be true, and it was just too hard to tease apart all the threads with the technology available in those days. I have no idea what's happened in that area since then.

There's room for half a dozen law review articles on the autonomous trolley topic, and anyone who wants to pontificate on the topic ought to be familiar with them, whenever those articles come into existence.

The net of relevant case law can be cast pretty broadly. I would expect there to be some fascinating cases involving actual trolleys and the liability of their human operators when there was a horseless carriage stalled on the tracks, with drunken drivers and invalid grandmothers who were unable to escape on their own. Could the operator be expected to know that the driver or the grandmother was incapacitated? What about injuries to the passengers in the trolley? In modern days, can an autonomous car be expected to know that its only passenger is a dog being sent to the vet when it prioritizes its own passengers ahead of pedestrians? Has PETA said anything about the sacrifice of those Iditarod sled dogs to save the lives of humans in Nome? How much was Casey Jones' intoxication with cocaine a mitigating factor in Mrs. Jones' wrongful death lawsuit against the switchman who allowed train 102 onto the wrong track?

What about the laws in other jurisdictions? How is liability assigned in California vs Nevada vs Texas vs Pennsylvania? What about European law? Conveniently, both Sweden (Volvo) and Germany (Mercedes, BMW, etc.) are part of the EU. Do national regulations and legal philosophies such as the Napoleonic basis of French law override EU ones? How will the rules change in the UK after Brexit? There's an interesting technical problem in how to modularize control systems to allow different legal and regulatory rules to be plugged in for different jurisdictions.

The philosophical discussions have shown that there are no simple, obvious solutions, so we're going to end up with a complicated set of legal rules and precedents. We need to understand sooner rather than later just how complicated those rules are that we're starting from, so that we can have a fact-based discussion about what needs to be changed, if anything.

brad

Tue, 2016-10-18 00:09

There is a simple and obvious solution

The simple and obvious solution is not to attempt to generate specialized solutions to very rare problems that have no right answer. The only winning move is not to play. Accept that simple default answers, like staying in your ROW, may not match what people would answer in an ethics quiz, but because the situations are rare, there are other priorities.

We decide priorities all the time on safety. We decide not to put effort into preventing very rare things if the cost is high.

Boris

Fri, 2016-10-21 01:50

robocars as transformed killer bots

This is the top-posted comment I put on my Google+ reshare of this post:

Trolley-problem-solving cars are transformed killer bots.

This is shorthand for what I have to add to this comprehensive blog post, which nicely lines up ammunition against what I've been railing against since the topic first came up. My take is that trolley problems in general, and the obsession with having cars solve them, constitute surreptitious PR for the military-industrial complex and for the engineering of autonomous killer robots, in a country that's fighting multiple wars.

Conspicuously enough, the idea that autonomous cars should solve trolley problems first came up just a couple of weeks after leading researchers in robotics had signed a call for a moratorium on the development of autonomous killing machines, aka LAWS for Lethal Autonomous Weapons Systems, an acronym likely coined by a fan of Robocop.

Come to think of it, it's not wholly surprising that Obama would be easier to sway than the average clear-headed person, given his direct involvement with killer drone warfare.

Hunt Lee

Thu, 2016-10-27 20:15

The purpose of building robocars?

One of the reasons we are trying to build robocars, I think, is to anticipate the trolley problem in advance and handle it properly.

I guess a robocar could predict the problem a minute or even a second before it occurs.

We are trying to find the solution, using lots of skills and equipment, before we encounter the trolley problem or something like it.