Critique of NHTSA's newly released regulations

The long-awaited list of recommendations and potential regulations for robocars has just been released by NHTSA, the federal agency that regulates car safety and safety issues in car manufacture. Normally, NHTSA does not regulate car technology before it is released into the market, and while the agency says it is wary of slowing down this safety-increasing technology, it has decided to do the unprecedented -- and at a whopping 115 pages.

Broadly, this is very much the wrong direction. Nobody -- not Google, Uber, Ford, GM and certainly not NHTSA -- knows the precise form these cars will have when deployed. Almost surely something will change from what we know today. They know this, but still wish to move. Some of the larger players have pushed for regulation. Big companies like certainty. They want to know what the rules will be before they invest. Startups thrive better in the chaos, making up the rules as we go along.

NHTSA hopes to define "best practices" but the best anybody can do in 2016 is lay down existing practices and conventional wisdom. The entirely new methods of providing safety that are yet to be invented won't be in such a definition.

The document is very detailed, so it will generate several blog posts of analysis. Here I present just my initial reactions. Those reactions are broadly negative. This document is too detailed by an order of magnitude. Its regulations begin today, but fortunately NHTSA is also accepting public comment. The scope of the document is so large, however, that it seems extremely unlikely the agency will scale it back to the level it should be at. As such, robocar development in the USA may be seriously set back.

Vehicle performance guidelines

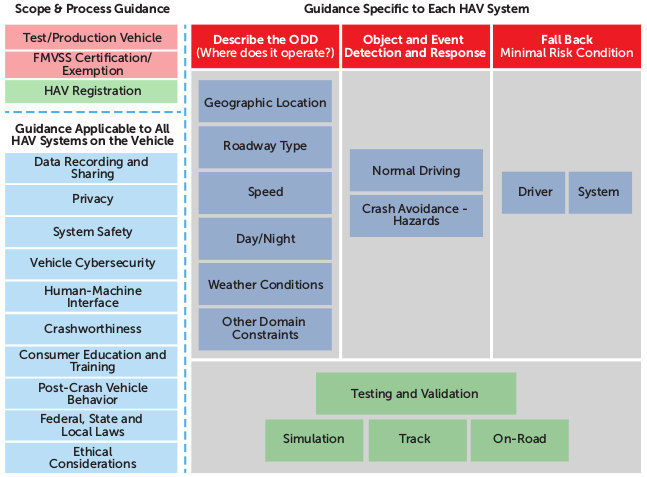

The first part of the regulations is a proposed 15-point safety standard. Vendors must certify that their cars meet these standards. NHTSA wants the power, according to an op-ed by no less than President Obama, to pull cars from the road that don't meet these safety promises.

- Data Recording and Sharing

- Privacy

- System Safety

- Vehicle Cybersecurity

- Human Machine Interface

- Crashworthiness

- Consumer Education and Training

- Registration and Certification

- Post-Crash Behavior

- Federal, State and Local Laws

- Operational Design Domain

- Object and Event Detection and Response

- Fall Back (Minimal Risk Condition)

- Validation Methods

- Ethical Considerations

As you might guess, the most disturbing is the last one. As I have written many times, ethical "trolley problems," where cars must decide between killing one person or another, are a philosophy class tool, not a guide to real-world situations. Developers should spend as close to zero effort on these problems as possible, since they are not common enough to warrant special attention, and would get none if not for our morbid fascination with machines making life-or-death decisions in hypothetical situations. Let the policymakers answer these questions if they want to; programmers and vendors don't want to.

For the past couple of years, this has been a game that's kept people entertained and ethicists employed. The idea that government regulations might demand solutions to these problems before these cars can go on the road is appalling. If these regulations are written this way, we will delay saving lots of real lives in the interest of debating which highly hypothetical lives will be saved or harmed in ridiculously rare situations.

NHTSA's rules demand that ethical decisions be "made consciously and intentionally." Algorithms must be "transparent" and based on input from regulators, drivers, passengers and road users. While the section mentions machine learning techniques, it seems in the same breath to forbid them, since a decision learned from training data is neither explicitly chosen nor easy to explain.

Most of the other rules are more innocuous. Of course all vendors will know, and have little trouble listing, what roads their car works on, and they will have extensive testing data on the car's perception system and how it handles every sort of failure. However, the requirement to keep the government constantly updated will be burdensome. Some vehicles will be adding streets to their route maps literally every day.

While I have been a professional privacy advocate, and I do care about just how the privacy of car users is protected, I am frankly not that concerned during the pilot project phase about how well this is done. I do want a good regime -- and even the ability to take an anonymous taxi ride -- so it's perhaps not too bad to think about these things now, but I suspect these regulations will be fairly meaningless unless written in consultation with independent privacy advocates. The hard reality is that during the test phase, even a privacy advocate has to admit that the cars will need to make very extensive recordings of everything they can, so that any problems encountered can be studied, fixed and placed into the test suite.

50 state laws

NHTSA's plan has been partially endorsed by the Self-Driving Coalition for Safer Streets (whose members include big players Ford, Google, Volvo, Uber and Lyft). They like the fact that it offers guidance to states on how to write their regulations, since they fear that regulations may differ too much from state to state. I have written that having 50 sets of rules may not be that bad an idea: jurisdictional competition can allow legal innovation, and having software load new parameters as you drive over a border is not that hard.
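As a rough illustration of why per-state rules need not be a software burden, here is a minimal Python sketch. It assumes the car already knows which jurisdiction it is in (say, from its map and position). The `RuleSet` fields, the jurisdiction names `state_a`/`state_b` and their values are all hypothetical placeholders, not any real state's law.

```python
from dataclasses import dataclass

# Hypothetical per-jurisdiction parameters. Field names and values are
# invented for illustration and do not describe any real state's rules.
@dataclass(frozen=True)
class RuleSet:
    jurisdiction: str
    right_turn_on_red: bool
    min_following_gap_s: float
    requires_safety_driver: bool

RULES = {
    "state_a": RuleSet("state_a", right_turn_on_red=True,
                       min_following_gap_s=2.0, requires_safety_driver=False),
    "state_b": RuleSet("state_b", right_turn_on_red=False,
                       min_following_gap_s=3.0, requires_safety_driver=True),
}

class RulesManager:
    """Swaps the active parameter set when the vehicle crosses a border."""

    def __init__(self, rules, initial):
        self._rules = rules
        self.active = rules[initial]
        self.history = [initial]  # jurisdictions entered, in order

    def on_position_update(self, jurisdiction):
        # Reload only when the jurisdiction actually changes.
        if jurisdiction != self.active.jurisdiction:
            if jurisdiction not in self._rules:
                # A real system would need a defined fallback here (e.g. not
                # entering unmapped jurisdictions); raising just exposes the gap.
                raise KeyError(f"no rule set loaded for {jurisdiction!r}")
            self.active = self._rules[jurisdiction]
            self.history.append(jurisdiction)
        return self.active

mgr = RulesManager(RULES, "state_a")
for j in ["state_a", "state_a", "state_b", "state_b"]:
    rules = mgr.on_position_update(j)
print(rules.right_turn_on_red)  # False
print(mgr.history)              # ['state_a', 'state_b']
```

The point of the design is that the driving code reads its limits from `mgr.active` rather than hard-coding them, so a border crossing is just a table lookup.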

In this document NHTSA asks the states to yield to the DOT on regulating robocar operation and performance. States should stick to registering cars, rules of the road, safety inspections and insurance. States will regulate human drivers as before, but the feds will regulate computer drivers.

States will still regulate testing, in theory, but the test cars must comply with the federal regulations.

New Authorities

A large part of the document just lists the legal justifications for NHTSA to regulate in this fashion and is primarily for policy wonks. Section 4, however, lists new authorities NHTSA is going to seek in order to do more regulation.

Some of the authorities they may seek include:

- Pre-market safety assurance: Defining testing tools and methods to be used before selling

- Pre-market approval authority: Vendors would need approval from NHTSA before selling, rather than self-certifying compliance with the regulations

- Hybrid approaches of pre-market approval and self-certification

- Cease and desist authority: The ability to demand cars be taken off the road

- Exemption authority: An ability to grant rule exemptions for testing

- Post-sale authority to regulate software changes

- Much more

Other quick notes:

- NHTSA has abandoned its own levels in favour of SAE's. SAE's levels were almost identical, of course, with the addition of a "level 5," which is meaningless because it requires a vehicle that can drive literally everywhere, and there is at present no real commercial reason to make a car that can do that.

- NHTSA is now pushing the acronym "HAV" (highly automated vehicle) as yet another contender in the large sea of names people use for this technology. (Self-driving car, driverless car, autonomous vehicle, automated vehicle, robocar etc.)

This was my preliminary report. More analysis can be found under the NHTSA tag.

Comments

Steve

Sat, 2016-09-24 02:50


Convolutional confusion

To be fair, most organisations dedicated to safety are overly cautious about change and prefer slow, incremental steps. The NHTSA are moving deep into uncharted territory and yet are openly encouraging the technology. I would at least give them full marks for their progressive stance, something you don't see much of in the public service.

One area that looks like an upcoming issue is "Algorithms must be transparent". You mentioned in an earlier article that convolutional neural networks are promising but have the issue of lacking transparency as it can be very difficult to find exactly where they went "wrong".

If I imagine a worst-case scenario, say a driverless truck veering across a double line and killing most passengers in an oncoming bus (something that has happened when drivers fell asleep), it would be bad if the software failure could not be precisely identified. I am guessing the trade-off between the cost and quality of "black box" data recording is going to cause a few boardroom arguments.

brad

Wed, 2016-09-28 13:18


This is the challenge

The dilemma we will face is a comparison between the following two results:

Which one should we wish deployed? For overall public safety, the machine learning one is probably the answer. For transparency and public understanding, the first one is the answer.

I am not sure the government should make any statements at present about which is better, though.

Steve

Tue, 2016-10-04 02:11


Liability vs Transparency

I agree that it is logical for the safest solution (assuming it is machine learning) not to be blocked by restrictive regulations demanding complete transparency.

However, in a scenario where a family has been wiped out by machine learning algorithms, people and the press will demand quick answers. It would be self defeating if the company's response was to hide behind lawyers and make vague statements that they have "re-educated" their AI system and it will never happen again.

While complete transparency may be impossible, fast and reasonably precise information on what mistakes the system made should be a goal. I would hope that the vehicle's system records exactly how it has classified all the surrounding elements, and the measures assigned to them, just before an accident, and that this information is quickly released to an independent authority (probably not something their lawyers would suggest).

I don't know if large companies would be trusted to set up best-practice procedures without regulations forcing them when liability is an issue. Things like the VW pollution scandal don't help public confidence, yet excessive regulations always create further problems.

brad

Thu, 2016-10-06 05:09


It depends how you build it

A full machine learning system would not necessarily have logs of how it classified all surrounding elements. A machine learning based classifier would, but if you attempt to combine the vision system and the decision system you may not.

It is possible that the liability would not be enough to keep companies safety-minded. However, we usually wait to see them fail before we regulate, as regulations also fail. In VW's case, the regulations did not help. VW took deliberate steps to get around them. That is, however, an exception for which they have been heavily punished. Or so we should hope.

Safety, though, is different from emissions. As I believe makers of self-driving cars will self-insure, if their cars have accidents, it is the makers who will pay. They have less motive to lie because they would be lying to themselves.

John Fisher

Sat, 2016-10-01 15:05


NHTSA docs

Great summary. I haven't read the documents and won't, so thanks!

The point about the trolley problem is absolutely correct. It's a stupid red herring from people with zero understanding of the issues. I have been telling people that the cars will just pull over and stop when control fails. It's the obvious solution; it's what humans do.

The point about large companies liking regs is excellent. I work for a medical robotics company, and though we are quite small, we are the biggest in our niche. With HIPAA we are regulated (though hospitals can ignore the regs!) and I see it as both a burden and a barrier to entry.

But.

I can't imagine any small company doing autonomous cars. Tesla is the first successful automobile startup in the Western hemisphere since 1945, and its story is remarkable. It's impossible to make money in a high-tech consumer market without scale, and the barrier to entry, today, is huuuge (sorry :) ). Sure, there might someday be a car industry like computers, with modular business pieces that can be combined (see Raspberry Pi). The current three-wheeler startups are, I believe, doomed. They can't make money at a low enough price point. Not when you can buy a Korean car for $13k.

Anyway, good articles!

Alejandro

Tue, 2016-12-13 05:30


regulations for the grandstand (tribuna in Spanish)

I posted this before, in the wrong place.

Some possibilities:

1) The authorities need to make regulations because they are afraid of being blamed if something terrible happens (a robocar pushing a school bus into an abyss). Once they learn more about robocars (RCs), the regulations will change. What government is trying to avoid is an RC running without approval, and it cannot approve something when it does not know which tests it must pass.

2) Unfortunately, it is more important to decide who is guilty and must pay than to make things work well, with near-zero risk.

Regulations can make it more difficult to build RCs that work the way people want, so deployment of RCs could be delayed. Who wants that?

A) Automakers, who are afraid of losing their profits because they do not know how to make RCs and depend on newly arrived companies that make something they do not know: software.

B) Local and national governments, which would get less tax money.

C) Insurance companies, which would get a lot less money.

D) Gasoline stations, which would get less money or even disappear.

E) Petroleum companies, which would get less money.

F) Dealers, mechanics and body shops, which will disappear.

G) Emergency services and hospitals, which would get less money.

I will not comment on most of the regulations because many of them make no sense. I agree with you, Brad.
