Let the policymakers handle the "trolley" problems

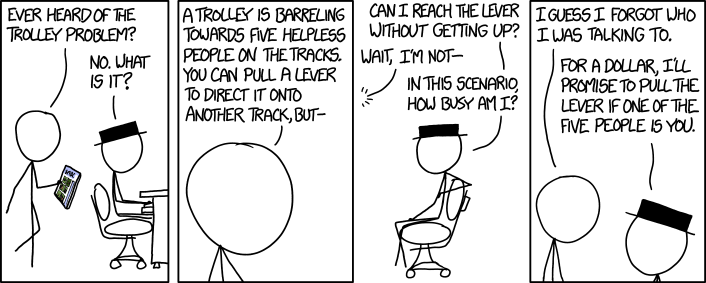

Submitted by brad on Thu, 2016-06-09 19:21

When I give talks on robocars, the most common question, one asked at nearly every talk, is the one known as the "trolley problem": "What will the car do if it has to choose between killing one person or another?", along with other related dilemmas. I have written frequently about how this is a very low-priority question in reality, far more interesting to philosophy classes than it is important. It is a super-rare event, and there are much more important everyday ethical questions that self-driving car developers have to solve long before they tackle this one.

In spite of this, the question persists in the public mind. We are both fascinated by and afraid of the idea of machines making life-or-death decisions. The tiny number of humans who face such dilemmas don't hold a detailed ethical debate in their minds; they can only go with their "gut" or very simple, quick reasoning. We are troubled because machines make no distinction between instant and carefully pondered reactions. The one time in billions of miles(*) that a machine faces such a question, it would presumably make a calculated decision based on its programming. That's foreign to our nature, and indeed not a task that programmers or vendors of robocars want.

There have been calls to come up with "ethical calculus" algorithms and put them in the cars. As a programmer, I could imagine coding such an algorithm, but I certainly would not want to, nor would I want to be held accountable for what it does, because by definition it's going to do something bad. The programmer's job is to make driving safer. Left to their own devices, I think most builders of robocars would try to punt the decision elsewhere if possible. The simplest way to punt the decision is to program the car to follow the law, which generally means to stay in its right-of-way. Yes, that means running over 3 toddlers who ran into the road instead of veering onto the sidewalk to run over Hitler. Staying in your lane is what the law says to do, and you are not punished for doing it. The law strongly forbids going onto the sidewalk or into another lane to deliberately hit something, no matter who you might be saving.

We might not like the law, but we do have the ability to change it.

Thus I propose the following: Driving regulators should create a special panel which can rule on driving ethics questions. If a robocar developer sees a question which requires some sort of ethical calculation whose answer is unclear, they can submit that question to the panel. The panel can deliberate and provide an answer. If the developer conforms to the ruling, they are absolved of responsibility. They did the right thing.

The panel would of course have people with technical skill on it, to make sure rulings are reasonable and can be implemented. Petitioners could also appeal rulings that would impede development, though they would probably already have suggested answers and described the implementation difficulties to the panel in their original petition.

The panel would not simply be presented with questions like, "How do you choose between hitting 2 adults or one child?" It might make more sense to propose formulae for evaluating multiple different situations. In the end, it would need to be reduced to something you can do with code.

Very important to the rulings would be an understanding of how certain requirements could slow down robocar development or raise costs. For example, a ruling that a car must make a decision based on the number of pedestrians it might hit demands that it be able to count pedestrians. Today's robocars may often be unsure whether a blob is 2 or 3 pedestrians, and nobody cares because generally the result is the same: you don't want to hit any number of pedestrians. Likewise, requirements to know the age of people on the road demand a great deal more of the car's perception system than anybody would normally develop, particularly if you imagine you will ask it to tell a dwarf adult from a child.
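
To make the counting point concrete, here is a minimal Python sketch contrasting a law-following rule with a hypothetical count-based ruling. Everything in it is an assumption for illustration: the `PedestrianBlob` type, the two rule functions and the action names are invented here and do not describe any real robocar software. The perception output is modelled as a range ("2 or 3 people") rather than an exact count.

```python
from dataclasses import dataclass


@dataclass
class PedestrianBlob:
    """Hypothetical perception output: a cluster of pedestrians whose exact
    size the car may be unsure of, expressed as a min/max range."""
    min_count: int
    max_count: int


def stay_in_lane_rule(in_lane: PedestrianBlob, on_sidewalk: PedestrianBlob) -> str:
    # Law-following policy: brake within the right-of-way.
    # The counts are never consulted, so perception only needs to detect
    # that something is there, not how many people it contains.
    return "brake_in_lane"


def count_based_rule(in_lane: PedestrianBlob, on_sidewalk: PedestrianBlob) -> str:
    # Hypothetical "hit the fewest people" ruling. It can only act on a
    # comparison that holds across the whole uncertainty range.
    if in_lane.min_count > on_sidewalk.max_count:
        return "swerve_to_sidewalk"
    if in_lane.max_count <= on_sidewalk.min_count:
        # Fewer (or equal) people in the lane: stay in the right-of-way.
        return "brake_in_lane"
    # Ranges overlap: the answer depends on a count perception can't resolve.
    return "undetermined"


if __name__ == "__main__":
    lane = PedestrianBlob(min_count=2, max_count=3)
    sidewalk = PedestrianBlob(min_count=2, max_count=2)
    print(stay_in_lane_rule(lane, sidewalk))  # brake_in_lane
    print(count_based_rule(lane, sidewalk))   # undetermined
```

With a blob that might be 2 or 3 people, the law-following rule still has an answer, while the count-based ruling has none until perception improves. That gap is the kind of cost a panel would need to weigh.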

People with some level of identity (an address, a job) have ways to be accountable. If the damage rises to the level where refusing to fix it is a crime at some level, fear of the justice system might work, but it's unlikely the police are going to knock on somebody's door for throwing up in a car.

These networks are having their effect on robocar development. They are allowing significant progress in the use of vision systems for robotics and driving, and that progress is coming much faster than expected. Two years ago, I declared that the time when vision systems would be good enough to build a safe robocar without LIDAR was still fairly far away. That day has not yet arrived, but it is definitely closer, and it's much harder to say it won't come soon. At the same time, LIDAR and other sensors are improving and dropping in price. Quanergy (to whom I am an advisor) plans to ship $250 8-line LIDARs this year, and $100 high-resolution LIDARs in the next couple of years.